- The CyberLens Newsletter

- Posts

- OpenClaw AI and the Expansion of Autonomous Cyber Risks

OpenClaw AI and the Expansion of Autonomous Cyber Risks

The Arrival of a New Kind of Machine Actor

Want to get the most out of ChatGPT?

ChatGPT is a superpower if you know how to use it correctly.

Discover how HubSpot's guide to AI can elevate both your productivity and creativity to get more things done.

Learn to automate tasks, enhance decision-making, and foster innovation with the power of AI.

📜💻 Interesting Tech Fact:

Before there was such a thing as modern open-source platforms, early collaborative software projects in the 1960s had quietly shared source code across academic institutions to improve reliability rather than innovation. This practice laid the groundwork for today’s open ecosystems, proving that transparency was originally about trust and resilience—not speed 🚀.

Introduction

The rapid ascent of OpenClaw AI marks a pivotal shift in how digital work is executed. Unlike traditional AI tools that wait for prompts and produce outputs, OpenClaw represents a class of autonomous agents designed to act. It plans, decides, executes, adapts, and repeats—often without continuous human involvement. This transition from reactive systems to self-directed agents is not simply another productivity upgrade. It is the introduction of a new type of actor inside digital environments, one that operates at machine speed, across multiple systems, and with increasingly broad authority.

What makes OpenClaw AI noteworthy is not novelty alone, but momentum. The platform has captured attention because it aligns perfectly with current enterprise pressures: reduce operational friction, accelerate workflows, and scale decision-making beyond human limits. Yet the very traits that make OpenClaw powerful also place it squarely at the center of a growing security reckoning. When autonomy expands faster than governance, risk quietly compounds.

What OpenClaw AI Actually Is

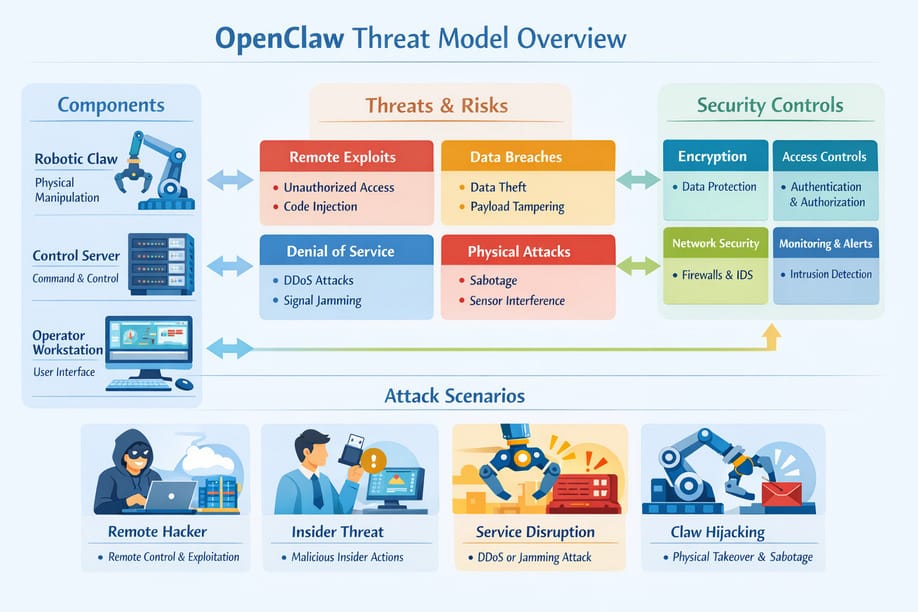

OpenClaw AI is best understood as an agentic orchestration platform. It is not a single model, chatbot, or script. Instead, it functions as a control layer that coordinates reasoning engines, tools, APIs, data sources, and execution environments into a cohesive autonomous workflow. Once configured, the agent can interpret objectives, break them into tasks, select tools, execute actions, evaluate outcomes, and iterate—all with minimal human direction.

At its core, OpenClaw combines large language model reasoning with operational capabilities. This allows it to move beyond analysis into action. It can write code, deploy infrastructure changes, query databases, interact with SaaS platforms, trigger workflows, and communicate results back to users or systems. In many environments, it effectively becomes a non-human operator with broad system reach.

Crucially, OpenClaw is designed to be extensible. Its value grows as more tools, plugins, and integrations are added. This modularity accelerates adoption but also expands complexity. Each new capability introduces additional trust relationships, permission scopes, and potential failure points. The agent’s intelligence is not just in its reasoning, but in the breadth of its access.

Who Is Building and Shaping OpenClaw AI

OpenClaw AI is shaped by a hybrid development model that blends open-source collaboration with structured platform governance. Rather than being driven by a single closed enterprise roadmap, its evolution reflects a community-oriented approach where contributors build connectors, task modules, and enhancements that extend the agent’s reach.

This development style fuels rapid innovation. It also introduces asymmetry. Security maturity often lags functionality because contributions prioritize capability over control. In ecosystems like this, guardrails are frequently retrofitted rather than designed from the start. The result is a platform that evolves faster than the policies meant to constrain it.

Developers working on OpenClaw tend to come from automation engineering, AI research, DevOps, and productivity tooling backgrounds. Their focus is efficiency and scale. Security considerations are present, but they are rarely the central design driver. This imbalance is not malicious—it is structural. When speed defines success, risk becomes externalized to downstream users.

How OpenClaw AI Is Used in Real Environments

OpenClaw AI is already being deployed across a wide spectrum of use cases. In engineering teams, it automates code generation, testing, deployment, and monitoring. In operations, it handles ticket triage, infrastructure changes, and system diagnostics. In business contexts, it manages research, reporting, content generation, and data synthesis.

What unites these use cases is delegated authority. Users do not merely ask OpenClaw for suggestions; they authorize it to act on their behalf. This delegation transforms the agent into a proxy identity that inherits the privileges of its human operator—or worse, accumulates privileges across roles.

As organizations become more comfortable with autonomous execution, OpenClaw agents are increasingly granted persistent access rather than temporary tokens. This persistence enables long-running workflows but also creates standing attack surfaces that operate continuously, often outside traditional monitoring windows.

Who the Primary Users Are

The user base for OpenClaw AI spans multiple roles, each with distinct risk implications:

Developers seeking to accelerate build and deployment cycles

DevOps teams managing complex cloud environments

Security teams experimenting with automated detection and response

Data analysts automating ingestion and reporting

Business users delegating research and operational tasks

The diversity of users matters because it fragments accountability. When an agent misbehaves, responsibility becomes diffuse. Was the fault in configuration, training data, tool integration, or model reasoning? This ambiguity complicates incident response and governance enforcement.

The Tools Commonly Used in Combination with OpenClaw AI

OpenClaw rarely operates alone. Its effectiveness depends on the ecosystem it connects to, which typically includes:

Large language models for reasoning and planning

Cloud APIs for infrastructure and service control

CI/CD pipelines for automated deployment

Databases and data warehouses for retrieval and updates

SaaS platforms for communication and workflow execution

Code execution environments for dynamic task fulfillment

Each integration extends the agent’s reach. Collectively, they create a mesh of trust relationships that are difficult to visualize and even harder to secure comprehensively.

Excessive Permission Accumulation

Autonomous agents thrive on access. Over time, OpenClaw instances often accumulate permissions beyond their original scope. This occurs through convenience-driven expansions, shared credentials, and broad API keys.

When permissions are excessive, compromise impact multiplies. An attacker does not need lateral movement if the agent already spans multiple systems. The agent becomes a supernode of authority, collapsing segmentation strategies that organizations rely on for containment.

Autonomous Data Exposure and Leakage

OpenClaw can access, transform, and transmit data without real-time human validation. This capability is essential for automation but dangerous when handling sensitive information.

Data leakage may occur unintentionally through summarization, logging, or external API calls. Because the agent operates legitimately, such exposure often bypasses traditional data loss prevention controls that focus on anomalous human behavior.

Prompt Injection and Indirect Manipulation

Autonomous agents are vulnerable to indirect manipulation through crafted inputs embedded in data sources, documents, or API responses. OpenClaw may interpret these inputs as instructions, altering its behavior without explicit compromise.

Unlike classic exploits, prompt manipulation exploits trust in language interpretation. The agent believes it is acting correctly, which makes detection exceptionally difficult.

Third-Party Toolchain Risk

Each plugin or integration introduces supply chain exposure. OpenClaw inherits the security posture of every tool it uses. A single compromised dependency can cascade through the agent’s workflow.

Because integrations are often community-developed, assurance levels vary widely. Security reviews are inconsistent, and update mechanisms can introduce new risks without clear visibility.

Lack of Deterministic Behavior

OpenClaw’s reasoning is probabilistic. Given the same objective, it may choose different actions over time. This variability complicates threat modeling and compliance validation.

Security teams struggle to define “expected behavior” when outcomes are not fixed. This undermines rule-based monitoring and makes post-incident analysis more complex.

Auditability and Attribution Gaps

When an autonomous agent takes action, logs may record what happened but not why. The absence of clear decision rationale hinders forensic investigation.

Attribution becomes murky. Was an action intentional, emergent, or influenced by external manipulation? Without clarity, accountability erodes.

Why Security Teams Must Be on Alert Now

OpenClaw AI represents a structural change, not a transient trend. Security teams face a future where non-human actors operate continuously inside critical systems. These actors do not get tired, do not forget credentials, and do not intuitively recognize danger.

Traditional security assumes humans as the primary risk vector. Autonomous agents invert this assumption. They are always active, always connected, and increasingly empowered. Ignoring this shift leaves organizations defending yesterday’s perimeter against tomorrow’s reality.

How OpenClaw Could Alter the Future of Cybersecurity

The rise of autonomous agents forces a redefinition of core security concepts. Identity must extend beyond humans. Privilege management must account for evolving agents. Monitoring must shift from static rules to behavioral understanding.

Security tooling will need to become agent-aware. Governance frameworks must incorporate autonomy thresholds, ethical constraints, and kill-switch mechanisms. The organizations that adapt early will shape safer patterns of use. Those that delay will inherit compounded risk.

Structured Risk Breakdown for Security Leaders

Autonomous agents like OpenClaw AI do not introduce a single category of risk; they introduce an interdependent system of risks that reinforce one another. For security leaders, understanding these dimensions in isolation is insufficient. Each risk domain below represents a structural shift in how authority, control, and failure propagate across modern environments. When combined, they redefine what “secure by design” must mean in an agent-driven enterprise.

Identity Sprawl Across Systems

OpenClaw AI operates as a persistent, non-human identity that frequently spans multiple platforms, services, and environments simultaneously. Unlike human identities, which are constrained by employment lifecycles and role definitions, agent identities often persist indefinitely and evolve organically. Over time, the agent becomes embedded across systems—cloud platforms, CI/CD pipelines, SaaS tools, data stores—without a centralized identity governance framework tracking its full footprint.

This sprawl erodes traditional identity boundaries. Security teams may know that an agent exists, but not where it operates, what credentials it holds, or how those credentials interrelate. When identity visibility is fragmented, revocation becomes incomplete. A “disabled” agent in one system may remain fully operational in another, creating ghost identities that silently retain access. For attackers, these orphaned identities represent low-noise, high-value entry points that often evade routine access reviews.

Privilege Escalation Through Convenience

Autonomous agents are frequently granted broader permissions than necessary to prevent workflow interruptions. What begins as a narrowly scoped role expands incrementally as users encounter edge cases and friction. Each added permission is justified as a one-time exception, but over time these exceptions accumulate into systemic overprivilege.

This convenience-driven escalation undermines the principle of least privilege. Unlike humans, agents do not self-regulate or question the necessity of access. Once granted, privileges are exercised programmatically and repeatedly. If compromised or manipulated, an overprivileged agent can execute destructive actions at scale without triggering suspicion, because its behavior aligns with previously authorized capabilities. In effect, the agent becomes a pre-approved insider with no contextual judgment.

Reduced Human-in-the-Loop Validation

OpenClaw AI is designed to minimize human intervention, which is precisely where its efficiency gains originate. However, this reduction in oversight removes a critical control point: human intuition. Humans notice when something “feels wrong,” even if it technically appears correct. Autonomous agents lack this intuition and operate strictly within their learned or inferred logic.

When validation checkpoints are removed or weakened, errors and manipulations propagate unchecked. A flawed assumption, ambiguous instruction, or malicious input can cascade across systems before detection. Security teams often discover issues only after downstream effects manifest—data corruption, unauthorized changes, or exposure events—by which point root cause analysis is significantly harder. The absence of human-in-the-loop mechanisms transforms small mistakes into systemic failures.

Expanded Attack Surface via Integrations

Every tool, API, and plugin connected to OpenClaw AI extends its operational reach—and its attack surface. The agent inherits not only functionality but also the security posture of each integration. Weak authentication, insecure defaults, or compromised dependencies in any connected tool become indirect vulnerabilities within the agent’s workflow.

This integration-driven expansion complicates risk assessment. Traditional security models evaluate applications individually, but autonomous agents operate across ecosystems. An attacker does not need to exploit OpenClaw directly; they only need to compromise the weakest linked system. Once inside, the agent’s trusted status allows malicious actions to move laterally under the guise of legitimate automation. The result is a blended threat model where boundaries between systems dissolve.

Limited Explainability of Decisions

OpenClaw AI makes decisions based on probabilistic reasoning rather than deterministic logic. While logs may capture actions taken, they rarely provide a complete, intelligible explanation of why a specific path was chosen over alternatives. This lack of explainability creates significant challenges for security governance.

Without clear reasoning trails, security teams struggle to distinguish between intended behavior, emergent behavior, and external influence. During incidents, this opacity delays response and weakens confidence in remediation. Leaders are left answering difficult questions—regulatory, legal, and executive—without definitive evidence. Over time, limited explainability erodes trust in autonomous systems, even when they function correctly.

Accelerated Blast Radius During Failure

Autonomous agents operate at machine speed and scale. When something goes wrong, the impact is not gradual; it is immediate and expansive. A misconfigured instruction or compromised integration can trigger thousands of actions in seconds, affecting systems that would normally be isolated by time and process.

This acceleration compresses the window for detection and response. Traditional incident response assumes a progression from anomaly to exploitation to impact. With OpenClaw AI, these phases can collapse into a single moment. By the time alerts surface, the damage may already be complete. The blast radius is no longer defined by network topology alone, but by the agent’s authority and reach.

Why These Risks Demand Executive Attention

Each of these risk domains challenges a foundational assumption in cybersecurity: that control is slower than failure. Autonomous agents invert that assumption. They act faster than humans can observe, decide, or intervene. For security leaders, the implication is clear—governance must be proactive, architectural, and continuous.

Managing OpenClaw AI risk is not about restricting innovation; it is about aligning autonomy with accountability. Organizations that treat these risks as isolated technical issues will struggle. Those that recognize them as systemic shifts in digital power will be better positioned to harness autonomy without surrendering control.

Operational Warning Signs Organizations Should Watch For

Security teams should monitor for patterns that indicate emerging agent risk:

Agents operating outside defined schedules

Gradual expansion of access scopes

Actions spanning unrelated systems

Limited logging of decision rationale

Dependency updates without review

Difficulty disabling or pausing agents

These indicators often precede incidents rather than follow them.

Strategic Controls That Will Matter Most

Mitigating OpenClaw risk requires intentional design:

Explicit privilege boundaries for agents

Continuous access review and expiration

Behavioral monitoring tailored to agents

Controlled toolchain onboarding

Human override mechanisms

Clear ownership and accountability

These controls are not optional enhancements. They are prerequisites for safe autonomy.

The Broader Implications for the Cyber Profession

Autonomous agents will not replace security professionals, but they will redefine their role. The focus will shift from manual enforcement to systemic design. Security becomes less about blocking actions and more about shaping environments where safe behavior emerges naturally.

This evolution demands new skills, new metrics, and new mental models. The profession must adapt as quickly as the technology it seeks to secure.

Final Thought

OpenClaw AI embodies both promise and peril. It offers a glimpse of a world where machines shoulder cognitive labor, freeing humans for higher-order work. Yet it also reveals how easily autonomy can outpace understanding.

The true challenge is not whether autonomous agents should exist, but whether organizations can cultivate the discipline required to govern them responsibly. Security has always been about managing trust under uncertainty. OpenClaw magnifies that challenge to unprecedented scale.

Those who confront this reality head-on—who rethink identity, privilege, and accountability for a machine-driven era—will define the next chapter of cybersecurity. Those who treat autonomy as just another tool will discover too late that tools which act can also decide.

Subscribe to CyberLens

Cybersecurity isn’t just about firewalls and patches anymore — it’s about understanding the invisible attack surfaces hiding inside the tools we trust.

CyberLens brings you deep-dive analysis on cutting-edge cyber threats like model inversion, AI poisoning, and post-quantum vulnerabilities — written for professionals who can’t afford to be a step behind.

📩 Subscribe to The CyberLens Newsletter today and Stay Ahead of the Attacks you can’t yet see.